Compare commits

No commits in common. "4.5.0" and "4.1.0" have entirely different histories.

6

VERSION

|

|

@ -1,3 +1,3 @@

|

|||

4.5.0

|

||||

last_version: 4.4.0

|

||||

source_branch: feature/mirror-update-pr-10

|

||||

4.1.0

|

||||

last_version: 4.0.0

|

||||

source_branch: feature/SIENTIAPDE-1379

|

||||

|

|

|

|||

|

|

@ -0,0 +1,134 @@

|

|||

#!/bin/bash

|

||||

|

||||

# Applies current directory content as a patch to an existing git repository

|

||||

#

|

||||

# Usage: ./git-apply-patch.sh <config-file>

|

||||

#

|

||||

# Config file format:

|

||||

# GIT_URL=https://github.com/user/repo.git

|

||||

# GIT_USER=username

|

||||

# GIT_TOKEN=your_token_or_password

|

||||

#

|

||||

# VERSION file format:

|

||||

# <new_version>

|

||||

# last_version: <previous_version>

|

||||

# source_branch: <branch_name> (optional)

|

||||

|

||||

set -e

|

||||

|

||||

if [ -z "$1" ]; then

|

||||

echo "Usage: $0 <config-file>"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

CONFIG_FILE="$1"

|

||||

|

||||

if [ ! -f "$CONFIG_FILE" ]; then

|

||||

echo "Error: Config file '$CONFIG_FILE' not found"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# Load config file

|

||||

source "$CONFIG_FILE"

|

||||

|

||||

# Validate required fields

|

||||

if [ -z "$GIT_URL" ]; then

|

||||

echo "Error: GIT_URL not defined in config file"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [ -z "$GIT_USER" ]; then

|

||||

echo "Error: GIT_USER not defined in config file"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [ -z "$GIT_TOKEN" ]; then

|

||||

echo "Error: GIT_TOKEN not defined in config file"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# Read VERSION file

|

||||

if [ ! -f "VERSION" ]; then

|

||||

echo "Error: VERSION file not found in current directory"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

NEW_VERSION=$(sed -n '1p' VERSION)

|

||||

LAST_VERSION=$(sed -n '2p' VERSION | sed 's/last_version: //')

|

||||

SOURCE_BRANCH=$(sed -n '3p' VERSION | sed 's/source_branch: //')

|

||||

|

||||

if [ -z "$NEW_VERSION" ]; then

|

||||

echo "Error: New version not found in VERSION file (line 1)"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [ -z "$LAST_VERSION" ]; then

|

||||

echo "Error: last_version not found in VERSION file (line 2)"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

echo "New version: $NEW_VERSION"

|

||||

echo "Last version: $LAST_VERSION"

|

||||

echo "Source branch: ${SOURCE_BRANCH:-N/A}"

|

||||

|

||||

# Build authenticated URL

|

||||

if [[ "$GIT_URL" == https://* ]]; then

|

||||

URL_WITHOUT_PROTOCOL="${GIT_URL#https://}"

|

||||

AUTH_URL="https://${GIT_USER}:${GIT_TOKEN}@${URL_WITHOUT_PROTOCOL}"

|

||||

elif [[ "$GIT_URL" == http://* ]]; then

|

||||

URL_WITHOUT_PROTOCOL="${GIT_URL#http://}"

|

||||

AUTH_URL="http://${GIT_USER}:${GIT_TOKEN}@${URL_WITHOUT_PROTOCOL}"

|

||||

else

|

||||

echo "Error: URL must start with http:// or https://"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# Create temp directory for cloning

|

||||

TEMP_DIR=$(mktemp -d)

|

||||

CURRENT_DIR=$(pwd)

|

||||

|

||||

echo ""

|

||||

echo "Cloning repository from branch '$LAST_VERSION'..."

|

||||

git clone --branch "$LAST_VERSION" --single-branch "$AUTH_URL" "$TEMP_DIR" 2>/dev/null || {

|

||||

echo "Branch '$LAST_VERSION' not found, trying to clone default branch..."

|

||||

git clone "$AUTH_URL" "$TEMP_DIR"

|

||||

cd "$TEMP_DIR"

|

||||

git checkout -b "$LAST_VERSION" 2>/dev/null || git checkout "$LAST_VERSION"

|

||||

cd "$CURRENT_DIR"

|

||||

}

|

||||

|

||||

echo "Copying current files to cloned repository..."

|

||||

# Copy all files except .git, temp dir, and config file

|

||||

rsync -av \

|

||||

--exclude='.git' \

|

||||

--exclude='test' \

|

||||

--exclude="$(basename "$TEMP_DIR")" \

|

||||

--exclude="$CONFIG_FILE" \

|

||||

"$CURRENT_DIR/" "$TEMP_DIR/"

|

||||

|

||||

cd "$TEMP_DIR"

|

||||

|

||||

echo "Creating new branch '$NEW_VERSION'..."

|

||||

git checkout -b "$NEW_VERSION" 2>/dev/null || git checkout "$NEW_VERSION"

|

||||

|

||||

echo "Adding changes..."

|

||||

git add -A

|

||||

|

||||

echo "Committing changes..."

|

||||

COMMIT_MSG="Release $NEW_VERSION"

|

||||

if [ -n "$SOURCE_BRANCH" ]; then

|

||||

COMMIT_MSG="$COMMIT_MSG (from $SOURCE_BRANCH)"

|

||||

fi

|

||||

git commit -m "$COMMIT_MSG" || echo "Nothing to commit"

|

||||

|

||||

echo "Pushing to remote..."

|

||||

git push -u origin "$NEW_VERSION"

|

||||

|

||||

# Cleanup

|

||||

cd "$CURRENT_DIR"

|

||||

rm -rf "$TEMP_DIR"

|

||||

|

||||

echo ""

|

||||

echo "Done! Patch applied and pushed to branch '$NEW_VERSION'"

|

||||

echo "Based on previous version: '$LAST_VERSION'"

|

||||

|

||||

|

|
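The script above reads `VERSION` with three `sed` calls keyed on line position. As a rough illustration only, the same layout (version on line 1, then `last_version:` and an optional `source_branch:`) could be parsed as below. This is a Python sketch, not part of the repository; `VersionInfo` and `parse_version_file` are hypothetical names, and unlike the script it looks the keys up by name rather than by line number.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VersionInfo:
    new_version: str
    last_version: str
    source_branch: Optional[str] = None


def parse_version_file(text: str) -> VersionInfo:
    """Parse the VERSION layout documented in the script header (hypothetical helper)."""
    lines = [line.strip() for line in text.splitlines()]
    new_version = lines[0] if lines else ""

    # Remaining lines are "key: value" pairs such as "last_version: 4.4.0".
    fields = {}
    for line in lines[1:]:
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()

    if not new_version:
        raise ValueError("New version not found in VERSION file (line 1)")
    if not fields.get("last_version"):
        raise ValueError("last_version not found in VERSION file")

    return VersionInfo(
        new_version=new_version,
        last_version=fields["last_version"],
        source_branch=fields.get("source_branch") or None,  # optional field
    )


info = parse_version_file(
    "4.5.0\nlast_version: 4.4.0\nsource_branch: feature/mirror-update-pr-10\n"
)
print(info)
```

Looking keys up by name means a reordered or missing optional line does not silently shift the other values, which is the main design difference from the positional `sed -n '2p'` approach.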

@ -0,0 +1,8 @@

|

|||

# Git repository configuration

|

||||

# Copy this file and fill with your credentials

|

||||

# NEVER commit this file with real credentials!

|

||||

|

||||

GIT_URL=http://localhost:35703/aignosi/library-distribution-mirror.git

|

||||

GIT_USER=aignosi

|

||||

GIT_TOKEN=your_token_or_password

|

||||

|

||||

|

|
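Both scripts load this file with `source`, which executes it as shell code. As a hedged sketch of a stricter alternative, the reader below treats the file as plain `KEY=VALUE` data and reports missing required fields. It is written in Python for illustration; `load_config` and `REQUIRED_KEYS` are hypothetical names, and the assumption that `#` starts a comment line comes from the sample file above.

```python
REQUIRED_KEYS = ("GIT_URL", "GIT_USER", "GIT_TOKEN")


def load_config(text: str) -> dict:
    """Read KEY=VALUE lines without executing them (hypothetical helper)."""
    config = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"Malformed config line: {raw!r}")
        config[key.strip()] = value.strip()

    missing = [key for key in REQUIRED_KEYS if not config.get(key)]
    if missing:
        raise ValueError("Not defined in config file: " + ", ".join(missing))
    return config


sample = """\
# Git repository configuration
GIT_URL=https://github.com/user/repo.git
GIT_USER=username
GIT_TOKEN=your_token_or_password
"""
config = load_config(sample)
print(sorted(config))
```

Only the first `=` splits a line, so values that themselves contain `=` are kept intact.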

@ -1,53 +1,62 @@

|

|||

#!/bin/bash

|

||||

|

||||

# Initializes a git repository and pushes to a remote using credentials from arguments

|

||||

# Initializes a git repository and pushes to a remote using credentials from a config file

|

||||

#

|

||||

# Usage: ./git-init-from-config.sh <git-url> <git-user> <git-token>

|

||||

# Usage: ./git-init-from-config.sh <config-file>

|

||||

#

|

||||

# Arguments:

|

||||

# git-url - Repository URL (e.g., https://gitea.example.com/user/repo.git)

|

||||

# git-user - Git username

|

||||

# git-token - Git token or password

|

||||

# Config file format (one value per line):

|

||||

# GIT_URL=https://github.com/user/repo.git

|

||||

# GIT_USER=username

|

||||

# GIT_TOKEN=your_token_or_password

|

||||

#

|

||||

# The branch name will be read from the VERSION file (first line)

|

||||

|

||||

set -e

|

||||

|

||||

if [ "$#" -lt 3 ]; then

|

||||

echo "Usage: $0 <git-url> <git-user> <git-token>"

|

||||

if [ -z "$1" ]; then

|

||||

echo "Usage: $0 <config-file>"

|

||||

echo ""

|

||||

echo "Arguments:"

|

||||

echo " git-url - Repository URL (e.g., https://gitea.example.com/user/repo.git)"

|

||||

echo " git-user - Git username"

|

||||

echo " git-token - Git token or password"

|

||||

echo "Config file format:"

|

||||

echo " GIT_URL=https://github.com/user/repo.git"

|

||||

echo " GIT_USER=username"

|

||||

echo " GIT_TOKEN=your_token_or_password"

|

||||

echo " GIT_BRANCH=main (optional)"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

GIT_URL="$1"

|

||||

GIT_USER="$2"

|

||||

GIT_TOKEN="$3"

|

||||

CONFIG_FILE="$1"

|

||||

|

||||

if [ ! -f "$CONFIG_FILE" ]; then

|

||||

echo "Error: Config file '$CONFIG_FILE' not found"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# Load config file

|

||||

source "$CONFIG_FILE"

|

||||

|

||||

# Validate required fields

|

||||

if [ -z "$GIT_URL" ]; then

|

||||

echo "Error: git-url is required"

|

||||

echo "Error: GIT_URL not defined in config file"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [ -z "$GIT_USER" ]; then

|

||||

echo "Error: git-user is required"

|

||||

echo "Error: GIT_USER not defined in config file"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [ -z "$GIT_TOKEN" ]; then

|

||||

echo "Error: git-token is required"

|

||||

echo "Error: GIT_TOKEN not defined in config file"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# Read version from VERSION file (first line)

|

||||

if [ ! -f "VERSION" ]; then

|

||||

echo "Error: VERSION file not found in current directory"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

GIT_BRANCH=$(head -n 1 VERSION | tr -d '[:space:]')

|

||||

GIT_BRANCH=$(head -n 1 VERSION)

|

||||

|

||||

if [ -z "$GIT_BRANCH" ]; then

|

||||

echo "Error: VERSION file is empty"

|

||||

|

|

@ -56,6 +65,8 @@ fi

|

|||

|

||||

echo "Version detected: $GIT_BRANCH"

|

||||

|

||||

# Build authenticated URL

|

||||

# Extract protocol and rest of URL

|

||||

if [[ "$GIT_URL" == https://* ]]; then

|

||||

URL_WITHOUT_PROTOCOL="${GIT_URL#https://}"

|

||||

AUTH_URL="https://${GIT_USER}:${GIT_TOKEN}@${URL_WITHOUT_PROTOCOL}"

|

||||

|

|
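The "Build authenticated URL" step concatenates `GIT_USER` and `GIT_TOKEN` into the URL as-is, so a token containing characters such as `@`, `/` or `!` can produce a URL that is parsed incorrectly. The sketch below shows one way to percent-encode the credentials first. It is a Python illustration under that assumption, not the scripts' actual behaviour; `build_auth_url` is a hypothetical name and the sample credentials are made up.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def build_auth_url(git_url: str, user: str, token: str) -> str:
    """Insert percent-encoded credentials into an http(s) URL (hypothetical helper)."""
    parts = urlsplit(git_url)
    if parts.scheme not in ("http", "https"):
        raise ValueError("URL must start with http:// or https://")

    # safe='' forces reserved characters such as '@' and '/' to be encoded.
    userinfo = f"{quote(user, safe='')}:{quote(token, safe='')}"
    netloc = f"{userinfo}@{parts.netloc}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


print(build_auth_url("https://github.com/user/repo.git", "username", "p@ss/word!"))
```

The host and port are taken from `netloc` unchanged, so URLs such as `http://localhost:35703/...` keep their port.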

@ -88,3 +99,4 @@ git push -u origin "$GIT_BRANCH"

|

|||

|

||||

echo ""

|

||||

echo "Done! Repository pushed to $GIT_URL on branch $GIT_BRANCH"

|

||||

|

||||

|

|

|

|||

Binary file not shown.

|

|

@ -1,590 +0,0 @@

|

|||

Metadata-Version: 2.4

|

||||

Name: airium

|

||||

Version: 0.2.7

|

||||

Summary: Easy and quick html builder with natural syntax correspondence (python->html). No templates needed. Serves pure pythonic library with no dependencies.

|

||||

Home-page: https://gitlab.com/kamichal/airium

|

||||

Author: Michał Kaczmarczyk

|

||||

Author-email: michal.s.kaczmarczyk@gmail.com

|

||||

Maintainer: Michał Kaczmarczyk

|

||||

Maintainer-email: michal.s.kaczmarczyk@gmail.com

|

||||

License: MIT

|

||||

Keywords: natural html generator compiler template-less

|

||||

Classifier: Development Status :: 4 - Beta

|

||||

Classifier: Intended Audience :: Developers

|

||||

Classifier: Intended Audience :: Information Technology

|

||||

Classifier: Intended Audience :: Science/Research

|

||||

Classifier: Intended Audience :: System Administrators

|

||||

Classifier: Intended Audience :: Telecommunications Industry

|

||||

Classifier: Operating System :: OS Independent

|

||||

Classifier: Programming Language :: Python :: 3

|

||||

Classifier: Programming Language :: Python :: 3.8

|

||||

Classifier: Programming Language :: Python :: 3.9

|

||||

Classifier: Programming Language :: Python :: 3.10

|

||||

Classifier: Programming Language :: Python :: 3.11

|

||||

Classifier: Programming Language :: Python :: 3.12

|

||||

Classifier: Programming Language :: Python :: 3.13

|

||||

Classifier: Programming Language :: Python :: Implementation :: PyPy

|

||||

Classifier: Programming Language :: Python

|

||||

Classifier: Topic :: Database :: Front-Ends

|

||||

Classifier: Topic :: Documentation

|

||||

Classifier: Topic :: Internet :: WWW/HTTP :: Browsers

|

||||

Classifier: Topic :: Internet :: WWW/HTTP :: Dynamic Content

|

||||

Classifier: Topic :: Internet :: WWW/HTTP

|

||||

Classifier: Topic :: Scientific/Engineering :: Visualization

|

||||

Classifier: Topic :: Software Development :: Code Generators

|

||||

Classifier: Topic :: Text Processing :: Markup :: HTML

|

||||

Classifier: Topic :: Utilities

|

||||

Description-Content-Type: text/markdown

|

||||

License-File: LICENSE

|

||||

Provides-Extra: dev

|

||||

Requires-Dist: check-manifest; extra == "dev"

|

||||

Requires-Dist: flake8~=7.1; extra == "dev"

|

||||

Requires-Dist: mypy~=1.10; extra == "dev"

|

||||

Requires-Dist: pytest-cov~=3.0; extra == "dev"

|

||||

Requires-Dist: pytest-mock~=3.6; extra == "dev"

|

||||

Requires-Dist: pytest~=6.2; extra == "dev"

|

||||

Requires-Dist: types-beautifulsoup4~=4.12; extra == "dev"

|

||||

Requires-Dist: types-requests~=2.32; extra == "dev"

|

||||

Provides-Extra: parse

|

||||

Requires-Dist: requests<3,>=2.12.0; extra == "parse"

|

||||

Requires-Dist: beautifulsoup4<5.0,>=4.10.0; extra == "parse"

|

||||

Dynamic: author

|

||||

Dynamic: author-email

|

||||

Dynamic: classifier

|

||||

Dynamic: description

|

||||

Dynamic: description-content-type

|

||||

Dynamic: home-page

|

||||

Dynamic: keywords

|

||||

Dynamic: license

|

||||

Dynamic: license-file

|

||||

Dynamic: maintainer

|

||||

Dynamic: maintainer-email

|

||||

Dynamic: provides-extra

|

||||

Dynamic: summary

|

||||

|

||||

## Airium

|

||||

|

||||

Bidirectional `HTML`-`python` translator.

|

||||

|

||||


|

||||

|

||||

Key features:

|

||||

|

||||

- simple, straight-forward

|

||||

- template-less (just the python, you may say goodbye to all the templates)

|

||||

- DOM structure is strictly represented by python indentation (with context-managers)

|

||||

- gives much cleaner `HTML` than regular templates

|

||||

- equipped with reverse translator: `HTML` to python

|

||||

- can output either pretty (default) or minified `HTML` code

|

||||

|

||||

# Generating `HTML` code in python using `airium`

|

||||

|

||||

#### Basic `HTML` page (hello world)

|

||||

|

||||

```python
from airium import Airium

a = Airium()

a('<!DOCTYPE html>')
with a.html(lang="pl"):
    with a.head():
        a.meta(charset="utf-8")
        a.title(_t="Airium example")

    with a.body():
        with a.h3(id="id23409231", klass='main_header'):
            a("Hello World.")

html = str(a)  # casting to string extracts the value
# or directly to UTF-8 encoded bytes:
html_bytes = bytes(a)  # casting to bytes is a shortcut to str(a).encode('utf-8')

print(html)
```

|

||||

|

||||

This prints the following string:

|

||||

|

||||

```html
<!DOCTYPE html>
<html lang="pl">
  <head>
    <meta charset="utf-8" />
    <title>Airium example</title>
  </head>
  <body>
    <h3 id="id23409231" class="main_header">
      Hello World.
    </h3>
  </body>
</html>
```

|

||||

|

||||

In order to store it as a file, just:

|

||||

|

||||

```python
with open('that/file/path.html', 'wb') as f:
    f.write(bytes(a))  # `a` is the Airium instance; same as html.encode('utf-8')
```
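A file opened in `'wb'` mode expects bytes, and calling `bytes()` on a plain `str` without an encoding raises `TypeError`. The stdlib-only sketch below illustrates the difference; the sample string and the temporary file path are made up for the example and stand in for the result of `str(a)`.

```python
import os
import tempfile

html = "<h3>Zażółć gęślą jaźń</h3>\n"  # stands in for str(a)

try:
    bytes(html)  # no encoding given
except TypeError as exc:
    print("bytes(str) needs an encoding:", exc)

data = html.encode("utf-8")  # explicit encoding works for any str

path = os.path.join(tempfile.mkdtemp(), "page.html")
with open(path, "wb") as f:
    f.write(data)

print(os.path.getsize(path), "bytes written")
```

With an `Airium` instance at hand, `bytes(a)` gives the same UTF-8 bytes directly, as the first example notes.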

|

||||

|

||||

#### Simple image in a div

|

||||

|

||||

```python
from airium import Airium

a = Airium()

with a.div():
    a.img(src='source.png', alt='alt text')
    a('the text')

html_str = str(a)
print(html_str)
```

|

||||

|

||||

```html

<div>
  <img src="source.png" alt="alt text"/>
  the text
</div>
```

|

||||

|

||||

#### Table

|

||||

|

||||

```python
from airium import Airium

a = Airium()

with a.table(id='table_372'):
    with a.tr(klass='header_row'):
        a.th(_t='no.')
        a.th(_t='Firstname')
        a.th(_t='Lastname')

    with a.tr():
        a.td(_t='1.')
        a.td(id='jbl', _t='Jill')
        a.td(_t='Smith')  # can use _t or text

    with a.tr():
        a.td(_t='2.')
        a.td(_t='Roland', id='rmd')
        a.td(_t='Mendel')

table_str = str(a)
print(table_str)

# To store it to a file (the file must be opened for writing):
with open('/tmp/airium_www.example.com.py', 'w') as f:
    f.write(table_str)
```

|

||||

|

||||

Now `table_str` contains the following string:

|

||||

|

||||

```html

<table id="table_372">
  <tr class="header_row">
    <th>no.</th>
    <th>Firstname</th>
    <th>Lastname</th>
  </tr>
  <tr>
    <td>1.</td>
    <td id="jbl">Jill</td>
    <td>Smith</td>
  </tr>
  <tr>
    <td>2.</td>
    <td id="rmd">Roland</td>
    <td>Mendel</td>
  </tr>
</table>
```

|

||||

|

||||

### Chaining shortcut for elements with only one child

|

||||

|

||||

_New in version 0.2.2_

|

||||

|

||||

Given a structure with a large number of nested `with` statements:

|

||||

|

||||

```python
from airium import Airium

a = Airium()

with a.article():
    with a.table():
        with a.thead():
            with a.tr():
                a.th(_t='Column 1')
                a.th(_t='Column 2')
        with a.tbody():
            with a.tr():
                with a.td():
                    a.strong(_t='Value 1')
                a.td(_t='Value 2')

table_str = str(a)
print(table_str)
```

|

||||

|

||||

You may use a shortcut that is equivalent to:

|

||||

|

||||

```python
from airium import Airium

a = Airium()

with a.article().table():
    with a.thead().tr():
        a.th(_t="Column 1")
        a.th(_t="Column 2")
    with a.tbody().tr():
        a.td().strong(_t="Value 1")
        a.td(_t="Value 2")

table_str = str(a)
print(table_str)
```

|

||||

|

||||

```html

<article>
  <table>
    <thead>
      <tr>
        <th>Column 1</th>
        <th>Column 2</th>
      </tr>
    </thead>
    <tbody>
      <tr>
        <td>
          <strong>Value 1</strong>
        </td>
        <td>Value 2</td>
      </tr>
    </tbody>
  </table>
</article>
```

|

||||

|

||||

# Options

|

||||

|

||||

### Pretty or Minify

|

||||

|

||||

By default, airium builds `HTML` code indented with spaces, with line breaks being line feed `\n` characters.

|

||||

This can be changed when creating an `Airium` instance. All available arguments, with their default values, are:

|

||||

|

||||

```python
a = Airium(
    base_indent='  ',  # str
    current_level=0,  # int
    source_minify=False,  # bool
    source_line_break_character="\n",  # str
)
```

|

||||

|

||||

#### minify

|

||||

|

||||

In this mode the size of the code is minimized, i.e. it contains as little whitespace as possible.

|

||||

The option can be enabled with `source_minify` argument, i.e.:

|

||||

|

||||

```python

|

||||

a = Airium(source_minify=True)

|

||||

```

|

||||

|

||||

If you need to explicitly add a line break in the source code (not a `<br/>`):

|

||||

|

||||

```python
a = Airium(source_minify=True)
a.h1(_t="Here's your table")
with a.table():
    with a.tr():
        a.break_source_line()
        a.th(_t="Cell 11")
        a.th(_t="Cell 12")
    with a.tr():
        a.break_source_line()
        a.th(_t="Cell 21")
        a.th(_t="Cell 22")
    a.break_source_line()
a.p(_t="Another content goes here")
```

|

||||

|

||||

This results in the following code:

|

||||

|

||||

```html

|

||||

<h1>Here's your table</h1><table><tr>

|

||||

<th>Cell 11</th><th>Cell 12</th></tr><tr>

|

||||

<th>Cell 21</th><th>Cell 22</th></tr>

|

||||

</table><p>Another content goes here</p>

|

||||

```

|

||||

|

||||

Note that the `break_source_line` cannot be used

|

||||

in [context manager chains](#chaining-shortcut-for-elements-with-only-one-child).

|

||||

|

||||

#### indent style

|

||||

|

||||

By default, the generated HTML code is indented with two spaces per indent level.

|

||||

You can change it to `\t` or 4 spaces by setting `Airium` constructor argument, e.g.:

|

||||

|

||||

```python
a = Airium(base_indent="\t")  # one tab symbol
a = Airium(base_indent="    ")  # 4 spaces per each indentation level
a = Airium(base_indent=" ")  # 1 space per one level
# pick one of the above statements, it can be mixed with other arguments
```

|

||||

|

||||

Note that this setting is ignored when `source_minify` argument is set to `True` (see above).

|

||||

|

||||

There is a special case when you set the base indent to an empty string: indentation is disabled,
but line breaks are still added. To get rid of line breaks as well, see the `source_minify` argument.

|

||||

|

||||

#### indent level

|

||||

|

||||

The `current_level` argument is an integer that can be set to any non-negative
value, which causes `airium` to start indentation at the level offset given by that number.

|

||||

|

||||

#### line break character

|

||||

|

||||

By default, just a line feed (`\n`) is used for terminating lines of the generated code.

|

||||

You can change it to a different style, e.g. `\r\n` or `\r`, by setting `source_line_break_character` to the desired value.

|

||||

|

||||

```python

|

||||

a = Airium(source_line_break_character="\r\n") # windows' style

|

||||

```

|

||||

|

||||

Note that the setting has no effect when `source_minify` argument is set to `True` (see above).

|

||||

|

||||

# Using airium with web-frameworks

|

||||

|

||||

Airium can be used with frameworks like Flask or Django. It can completely replace
template engines, reducing the scatter of code across files, which may improve code organization.

|

||||

|

||||

Here is an example of using airium with Django. It implements a reusable `basic_body` and a view called `index`.

|

||||

|

||||

```python
# file: your_app/views.py
import contextlib
import inspect

from airium import Airium
from django.http import HttpResponse


@contextlib.contextmanager
def basic_body(a: Airium, useful_name: str = ''):
    """Works like a Django/Jinja template."""

    a('<!DOCTYPE html>')
    with a.html(lang='en'):
        with a.head():
            a.meta(charset='utf-8')
            a.meta(content='width=device-width, initial-scale=1', name='viewport')
            # do not use CSS from this URL in production, it's just for educational purposes
            a.link(href='https://unpkg.com/@picocss/pico@1.4.1/css/pico.css', rel='stylesheet')
            a.title(_t='Hello World')

        with a.body():
            with a.div():
                with a.nav(klass='container-fluid'):
                    with a.ul():
                        with a.li():
                            with a.a(klass='contrast', href='./'):
                                a.strong(_t="⌨ Foo Bar")
                    with a.ul():
                        with a.li():
                            a.a(klass='contrast', href='#', **{'data-theme-switcher': 'auto'}, _t='Auto')
                        with a.li():
                            a.a(klass='contrast', href='#', **{'data-theme-switcher': 'light'}, _t='Light')
                        with a.li():
                            a.a(klass='contrast', href='#', **{'data-theme-switcher': 'dark'}, _t='Dark')

                with a.header(klass='container'):
                    with a.hgroup():
                        a.h1(_t=f"You're on the {useful_name}")
                        a.h2(_t="It's a page made by our automatons with a power of steam engines.")

            with a.main(klass='container'):
                yield  # This is the point where main content gets inserted

            with a.footer(klass='container'):
                with a.small():
                    margin = 'margin: auto 10px;'
                    a.span(_t='© Airium HTML generator example', style=margin)

            # do not use JS from this URL in production, it's just for educational purposes
            a.script(src='https://picocss.com/examples/js/minimal-theme-switcher.js')


def index(request) -> HttpResponse:
    a = Airium()
    with basic_body(a, f'main page: {request.path}'):
        with a.article():
            a.h3(_t="Hello World from Django running Airium")
            with a.p().small():
                a("This bases on ")
                with a.a(href="https://picocss.com/examples/company/"):
                    a("Pico.css / Company example")

            with a.p():
                a("Instead of a HTML template, airium has been used.")
                a("The whole body is generated by a template "
                  "and the article code looks like that:")

            with a.code().pre():
                a(inspect.getsource(index))

    return HttpResponse(bytes(a))  # from django.http import HttpResponse
```

|

||||

|

||||

Route it in `urls.py` just like a regular view:

|

||||

|

||||

```python
# file: your_app/urls.py
from django.contrib import admin
from django.urls import path

import your_app.views  # import the submodule explicitly so `your_app.views` resolves

urlpatterns = [
    path('index/', your_app.views.index),
    path('admin/', admin.site.urls),
]
```

|

||||

|

||||

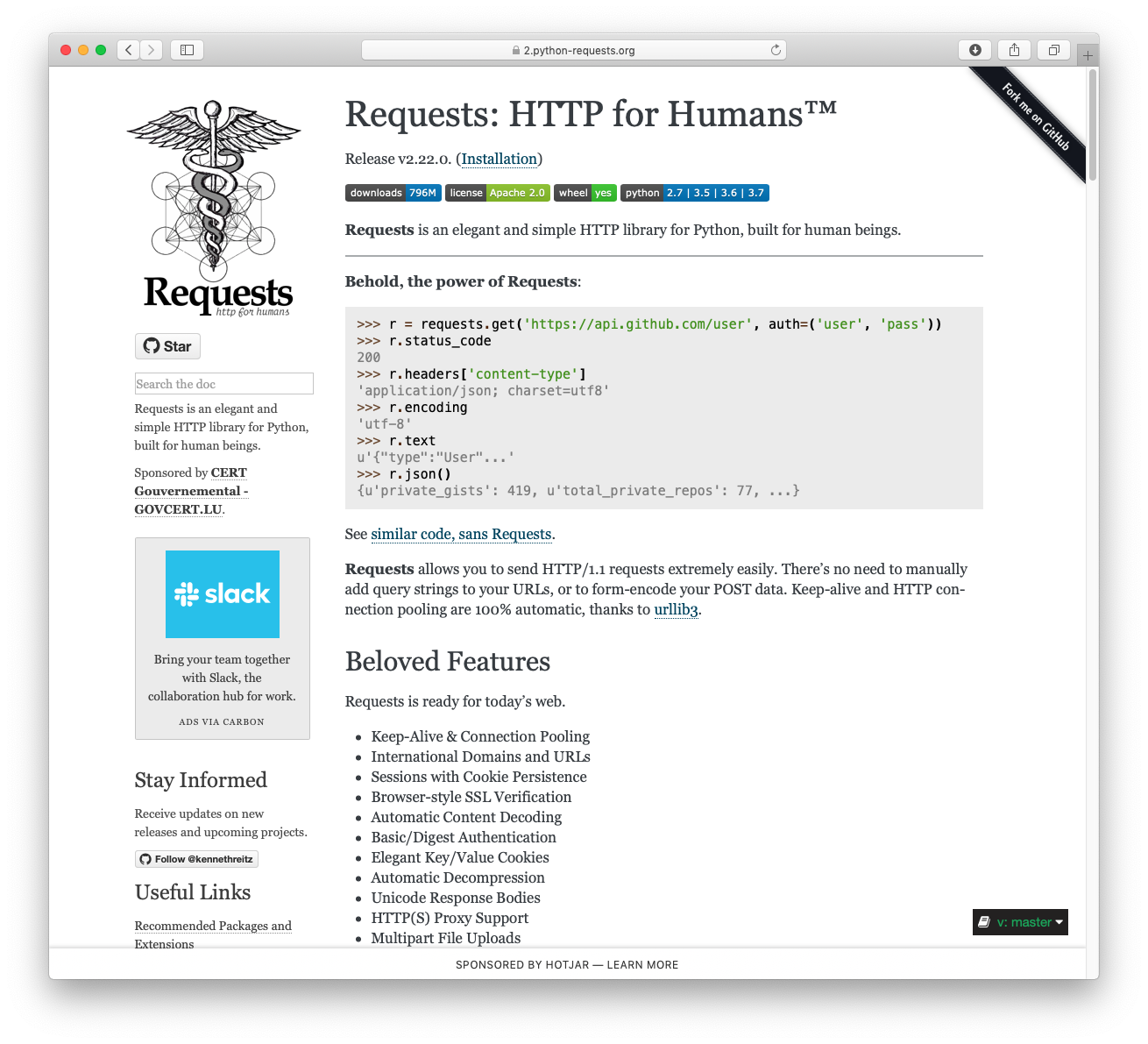

The result ing web page on my machine looks like that:

|

||||

|

||||

|

||||

|

||||

# Reverse translation

|

||||

|

||||

Airium is equipped with a transpiler `[HTML -> py]`.

|

||||

It generates python code out of a given `HTML` string.

|

||||

|

||||

### Using reverse translator as a binary:

|

||||

|

||||

Ensure you have [installed](#installation) `[parse]` extras. Then call in command line:

|

||||

|

||||

```bash

|

||||

airium http://www.example.com

|

||||

```

|

||||

|

||||

That will fetch the document and translate it to python code.

|

||||

The code calls `airium` statements that reproduce the `HTML` document given.

|

||||

It may give a clue on how to define the `HTML` structure of a given
web page using the `airium` package.

|

||||

|

||||

To store the translation's result into a file:

|

||||

|

||||

```bash

|

||||

airium http://www.example.com > /tmp/airium_example_com.py

|

||||

```

|

||||

|

||||

You can also parse local `HTML` files:

|

||||

|

||||

```bash

|

||||

airium /path/to/your_file.html > /tmp/airium_my_file.py

|

||||

```

|

||||

|

||||

You may also try to parse your Django templates. I'm not sure if it works,
but there will probably not be much to fix.

|

||||

|

||||

### Using reverse translator as python code:

|

||||

|

||||

```python
from airium import from_html_to_airium

# assume we have such a page given as a string:
html_str = """\
<!DOCTYPE html>
<html lang="pl">
  <head>
    <meta charset="utf-8" />
    <title>Airium example</title>
  </head>
  <body>
    <h3 id="id23409231" class="main_header">
      Hello World.
    </h3>
  </body>
</html>
"""

# to convert the html into python, just call:

py_str = from_html_to_airium(html_str)

# airium tests ensure that the result of the conversion is equal to the string:
assert py_str == """\
#!/usr/bin/env python
# File generated by reverse AIRIUM translator (version 0.2.7).
# Any change will be overridden on next run.
# flake8: noqa E501 (line too long)

from airium import Airium

a = Airium()

a('<!DOCTYPE html>')
with a.html(lang='pl'):
    with a.head():
        a.meta(charset='utf-8')
        a.title(_t='Airium example')
    with a.body():
        a.h3(klass='main_header', id='id23409231', _t='Hello World.')
"""
```

|

||||

|

||||

### <a name="transpiler_limitations">Transpiler limitations</a>

|

||||

|

||||

> so far in version 0.2.2:

|

||||

|

||||

- the result of translation does not keep the exact amount of leading whitespace
  within `<pre>` tags. They come over-indented in python code.

  This is not, however, an issue when code is generated from python to `HTML`.

- although it keeps the proper tag structure, the transpiler does not
  chain all the `with` statements, so in some cases the generated
  code may be heavily indented.

- it's not too fast

|

||||

|

||||

# <a name="installation">Installation</a>

|

||||

|

||||

If you need a new virtual environment, call:

|

||||

|

||||

```bash

|

||||

virtualenv venv

|

||||

source venv/bin/activate

|

||||

```

|

||||

|

||||

With it activated, you can install airium like this:

|

||||

|

||||

```bash

|

||||

pip install airium

|

||||

```

|

||||

|

||||

Reverse translation needs two additional packages. To install them, run:

|

||||

|

||||

```bash

|

||||

pip install airium[parse]

|

||||

```

|

||||

|

||||

Then check if the transpiler works by calling:

|

||||

|

||||

```bash

|

||||

airium --help

|

||||

```

|

||||

|

||||

> Enjoy!

|

||||

|

|

@ -1,20 +0,0 @@

|

|||

<!DOCTYPE html>

|

||||

<html lang="en">

|

||||

<head>

|

||||

<meta name="generator" content="simple503 version 0.4.0" />

|

||||

<meta name="pypi:repository-version" content="1.0" />

|

||||

<meta charset="UTF-8" />

|

||||

<title>

|

||||

Links for airium

|

||||

</title>

|

||||

</head>

|

||||

<body>

|

||||

<h1>

|

||||

Links for airium

|

||||

</h1>

|

||||

<a href="/airium/airium-0.2.7-py3-none-any.whl#sha256=35e3ae334327b17b7c2fc39bb57ab2c48171ca849f8cf3dff11437d1e054952e" data-dist-info-metadata="sha256=48022884c676a59c85113445ae9e14ad7f149808fb5d62c2660f8c4567489fe5">

|

||||

airium-0.2.7-py3-none-any.whl

|

||||

</a>

|

||||

<br />

|

||||

</body>

|

||||

</html>
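The index page above follows the simple repository layout, where each anchor's `href` carries the expected file hash as a `#sha256=...` fragment. As a hedged illustration, the sketch below pulls those pairs out of a page and compares a digest; it is a Python sketch, `LinkCollector` and `matches` are hypothetical names, and no file is downloaded here.

```python
import hashlib
from html.parser import HTMLParser
from urllib.parse import urldefrag


class LinkCollector(HTMLParser):
    """Collect (file URL, expected sha256) pairs from anchors in an index page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        url, fragment = urldefrag(href)  # split off the "#sha256=..." part
        algo, _, digest = fragment.partition("=")
        if algo == "sha256" and digest:
            self.links.append((url, digest))


def matches(data: bytes, expected_sha256: str) -> bool:
    """Return True when the sha256 of `data` equals the digest from the index."""
    return hashlib.sha256(data).hexdigest() == expected_sha256


page = (
    '<a href="/airium/airium-0.2.7-py3-none-any.whl'
    '#sha256=35e3ae334327b17b7c2fc39bb57ab2c48171ca849f8cf3dff11437d1e054952e">'
    "airium-0.2.7-py3-none-any.whl</a>"
)
collector = LinkCollector()
collector.feed(page)
print(collector.links)
```

In practice `matches` would be called with the bytes of the downloaded wheel and the digest collected for its URL.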

|

||||

Binary file not shown.

|

|

@ -1,187 +0,0 @@

|

|||

Metadata-Version: 2.1

|

||||

Name: apeye-core

|

||||

Version: 1.1.5

|

||||

Summary: Core (offline) functionality for the apeye library.

|

||||

Project-URL: Homepage, https://github.com/domdfcoding/apeye-core

|

||||

Project-URL: Issue Tracker, https://github.com/domdfcoding/apeye-core/issues

|

||||

Project-URL: Source Code, https://github.com/domdfcoding/apeye-core

|

||||

Author-email: Dominic Davis-Foster <dominic@davis-foster.co.uk>

|

||||

License: Copyright (c) 2022, Dominic Davis-Foster

|

||||

|

||||

Redistribution and use in source and binary forms, with or without modification,

|

||||

are permitted provided that the following conditions are met:

|

||||

|

||||

* Redistributions of source code must retain the above copyright notice,

|

||||

this list of conditions and the following disclaimer.

|

||||

* Redistributions in binary form must reproduce the above copyright notice,

|

||||

this list of conditions and the following disclaimer in the documentation

|

||||

and/or other materials provided with the distribution.

|

||||

* Neither the name of the copyright holder nor the names of its contributors

|

||||

may be used to endorse or promote products derived from this software without

|

||||

specific prior written permission.

|

||||

|

||||

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

|

||||

"AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

|

||||

LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

|

||||

A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER

|

||||

OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,

|

||||

EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO,

|

||||

PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR

|

||||

PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF

|

||||

LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING

|

||||

NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS

|

||||

SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

License-File: LICENSE

|

||||

Keywords: url

|

||||

Classifier: Development Status :: 5 - Production/Stable

|

||||

Classifier: Intended Audience :: Developers

|

||||

Classifier: License :: OSI Approved :: BSD License

|

||||

Classifier: Operating System :: OS Independent

|

||||

Classifier: Programming Language :: Python

|

||||

Classifier: Programming Language :: Python :: 3 :: Only

|

||||

Classifier: Programming Language :: Python :: 3.6

|

||||

Classifier: Programming Language :: Python :: 3.7

|

||||

Classifier: Programming Language :: Python :: 3.8

|

||||

Classifier: Programming Language :: Python :: 3.9

|

||||

Classifier: Programming Language :: Python :: 3.10

|

||||

Classifier: Programming Language :: Python :: 3.11

|

||||

Classifier: Programming Language :: Python :: 3.12

|

||||

Classifier: Programming Language :: Python :: Implementation :: CPython

|

||||

Classifier: Programming Language :: Python :: Implementation :: PyPy

|

||||

Classifier: Topic :: Software Development :: Libraries :: Python Modules

|

||||

Classifier: Typing :: Typed

|

||||

Requires-Python: >=3.6.1

|

||||

Requires-Dist: domdf-python-tools>=2.6.0

|

||||

Requires-Dist: idna>=2.5

|

||||

Description-Content-Type: text/x-rst

|

||||

|

||||

===========

|

||||

apeye-core

|

||||

===========

|

||||

|

||||

.. start short_desc

|

||||

|

||||

**Core (offline) functionality for the apeye library.**

|

||||

|

||||

.. end short_desc

|

||||

|

||||

|

||||

.. start shields

|

||||

|

||||

.. list-table::

|

||||

:stub-columns: 1

|

||||

:widths: 10 90

|

||||

|

||||

* - Tests

|

||||

- |actions_linux| |actions_windows| |actions_macos| |coveralls|

|

||||

* - PyPI

|

||||

- |pypi-version| |supported-versions| |supported-implementations| |wheel|

|

||||

* - Anaconda

|

||||

- |conda-version| |conda-platform|

|

||||

* - Activity

|

||||

- |commits-latest| |commits-since| |maintained| |pypi-downloads|

|

||||

* - QA

|

||||

- |codefactor| |actions_flake8| |actions_mypy|

|

||||

* - Other

|

||||

- |license| |language| |requires|

|

||||

|

||||

.. |actions_linux| image:: https://github.com/domdfcoding/apeye-core/workflows/Linux/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye-core/actions?query=workflow%3A%22Linux%22

|

||||

:alt: Linux Test Status

|

||||

|

||||

.. |actions_windows| image:: https://github.com/domdfcoding/apeye-core/workflows/Windows/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye-core/actions?query=workflow%3A%22Windows%22

|

||||

:alt: Windows Test Status

|

||||

|

||||

.. |actions_macos| image:: https://github.com/domdfcoding/apeye-core/workflows/macOS/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye-core/actions?query=workflow%3A%22macOS%22

|

||||

:alt: macOS Test Status

|

||||

|

||||

.. |actions_flake8| image:: https://github.com/domdfcoding/apeye-core/workflows/Flake8/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye-core/actions?query=workflow%3A%22Flake8%22

|

||||

:alt: Flake8 Status

|

||||

|

||||

.. |actions_mypy| image:: https://github.com/domdfcoding/apeye-core/workflows/mypy/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye-core/actions?query=workflow%3A%22mypy%22

|

||||

:alt: mypy status

|

||||

|

||||

.. |requires| image:: https://dependency-dash.repo-helper.uk/github/domdfcoding/apeye-core/badge.svg

|

||||

:target: https://dependency-dash.repo-helper.uk/github/domdfcoding/apeye-core/

|

||||

:alt: Requirements Status

|

||||

|

||||

.. |coveralls| image:: https://img.shields.io/coveralls/github/domdfcoding/apeye-core/master?logo=coveralls

|

||||

:target: https://coveralls.io/github/domdfcoding/apeye-core?branch=master

|

||||

:alt: Coverage

|

||||

|

||||

.. |codefactor| image:: https://img.shields.io/codefactor/grade/github/domdfcoding/apeye-core?logo=codefactor

|

||||

:target: https://www.codefactor.io/repository/github/domdfcoding/apeye-core

|

||||

:alt: CodeFactor Grade

|

||||

|

||||

.. |pypi-version| image:: https://img.shields.io/pypi/v/apeye-core

|

||||

:target: https://pypi.org/project/apeye-core/

|

||||

:alt: PyPI - Package Version

|

||||

|

||||

.. |supported-versions| image:: https://img.shields.io/pypi/pyversions/apeye-core?logo=python&logoColor=white

|

||||

:target: https://pypi.org/project/apeye-core/

|

||||

:alt: PyPI - Supported Python Versions

|

||||

|

||||

.. |supported-implementations| image:: https://img.shields.io/pypi/implementation/apeye-core

|

||||

:target: https://pypi.org/project/apeye-core/

|

||||

:alt: PyPI - Supported Implementations

|

||||

|

||||

.. |wheel| image:: https://img.shields.io/pypi/wheel/apeye-core

|

||||

:target: https://pypi.org/project/apeye-core/

|

||||

:alt: PyPI - Wheel

|

||||

|

||||

.. |conda-version| image:: https://img.shields.io/conda/v/conda-forge/apeye-core?logo=anaconda

|

||||

:target: https://anaconda.org/conda-forge/apeye-core

|

||||

:alt: Conda - Package Version

|

||||

|

||||

.. |conda-platform| image:: https://img.shields.io/conda/pn/conda-forge/apeye-core?label=conda%7Cplatform

|

||||

:target: https://anaconda.org/conda-forge/apeye-core

|

||||

:alt: Conda - Platform

|

||||

|

||||

.. |license| image:: https://img.shields.io/github/license/domdfcoding/apeye-core

|

||||

:target: https://github.com/domdfcoding/apeye-core/blob/master/LICENSE

|

||||

:alt: License

|

||||

|

||||

.. |language| image:: https://img.shields.io/github/languages/top/domdfcoding/apeye-core

|

||||

:alt: GitHub top language

|

||||

|

||||

.. |commits-since| image:: https://img.shields.io/github/commits-since/domdfcoding/apeye-core/v1.1.5

|

||||

:target: https://github.com/domdfcoding/apeye-core/pulse

|

||||

:alt: GitHub commits since tagged version

|

||||

|

||||

.. |commits-latest| image:: https://img.shields.io/github/last-commit/domdfcoding/apeye-core

|

||||

:target: https://github.com/domdfcoding/apeye-core/commit/master

|

||||

:alt: GitHub last commit

|

||||

|

||||

.. |maintained| image:: https://img.shields.io/maintenance/yes/2024

|

||||

:alt: Maintenance

|

||||

|

||||

.. |pypi-downloads| image:: https://img.shields.io/pypi/dm/apeye-core

|

||||

:target: https://pypi.org/project/apeye-core/

|

||||

:alt: PyPI - Downloads

|

||||

|

||||

.. end shields

|

||||

|

||||

Installation

|

||||

--------------

|

||||

|

||||

.. start installation

|

||||

|

||||

``apeye-core`` can be installed from PyPI or Anaconda.

|

||||

|

||||

To install with ``pip``:

|

||||

|

||||

.. code-block:: bash

|

||||

|

||||

$ python -m pip install apeye-core

|

||||

|

||||

To install with ``conda``:

|

||||

|

||||

.. code-block:: bash

|

||||

|

||||

$ conda install -c conda-forge apeye-core

|

||||

|

||||

.. end installation

|

||||

|

|

@ -1,20 +0,0 @@

|

|||

<!DOCTYPE html>

|

||||

<html lang="en">

|

||||

<head>

|

||||

<meta name="generator" content="simple503 version 0.4.0" />

|

||||

<meta name="pypi:repository-version" content="1.0" />

|

||||

<meta charset="UTF-8" />

|

||||

<title>

|

||||

Links for apeye-core

|

||||

</title>

|

||||

</head>

|

||||

<body>

|

||||

<h1>

|

||||

Links for apeye-core

|

||||

</h1>

|

||||

<a href="/apeye-core/apeye_core-1.1.5-py3-none-any.whl#sha256=dc27a93f8c9e246b3b238c5ea51edf6115ab2618ef029b9f2d9a190ec8228fbf" data-requires-python=">=3.6.1" data-dist-info-metadata="sha256=751bbcd20a27f156c12183849bc78419fbac8ca5a51c29fb5137e01e6aeb5e78">

|

||||

apeye_core-1.1.5-py3-none-any.whl

|

||||

</a>

|

||||

<br />

|

||||

</body>

|

||||

</html>

|

||||

Binary file not shown.
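The index page above carries the wheel's SHA-256 digest in the URL fragment and its Python requirement in `data-requires-python`. A minimal sketch of how a client might read those fields with the standard library follows; the class name `LinkCollector` and the helper `verify` are hypothetical names chosen for illustration, not part of simple503 or pip.

```python
import hashlib
from html.parser import HTMLParser
from urllib.parse import urldefrag

# Anchor copied from the index page above (other attributes omitted for brevity).
INDEX_HTML = """
<a href="/apeye-core/apeye_core-1.1.5-py3-none-any.whl#sha256=dc27a93f8c9e246b3b238c5ea51edf6115ab2618ef029b9f2d9a190ec8228fbf" data-requires-python=">=3.6.1">
apeye_core-1.1.5-py3-none-any.whl
</a>
"""


class LinkCollector(HTMLParser):
    """Collect file links and their hash fragments from a simple index page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        # Split "path#sha256=<digest>" into the path and the fragment.
        url, fragment = urldefrag(attr.get("href", ""))
        algo, _, digest = fragment.partition("=")
        self.links.append({
            "filename": url.rsplit("/", 1)[-1],
            "hash_algo": algo,
            "digest": digest,
            "requires_python": attr.get("data-requires-python"),
        })


def verify(data: bytes, link: dict) -> bool:
    """Return True if the bytes hash to the digest advertised by the link."""
    return hashlib.new(link["hash_algo"], data).hexdigest() == link["digest"]


collector = LinkCollector()
collector.feed(INDEX_HTML)
link = collector.links[0]
print(link["filename"], link["hash_algo"], link["requires_python"])
```

In practice `verify` would be called with the downloaded wheel's bytes; a mismatch means the file should be rejected.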

|

|

@ -1,210 +0,0 @@

|

|||

Metadata-Version: 2.1

|

||||

Name: apeye

|

||||

Version: 1.4.1

|

||||

Summary: Handy tools for working with URLs and APIs.

|

||||

Keywords: api,cache,requests,rest,url

|

||||

Author-email: Dominic Davis-Foster <dominic@davis-foster.co.uk>

|

||||

Requires-Python: >=3.6.1

|

||||

Description-Content-Type: text/x-rst

|

||||

Classifier: Development Status :: 5 - Production/Stable

|

||||

Classifier: Intended Audience :: Developers

|

||||

Classifier: License :: OSI Approved :: GNU Lesser General Public License v3 or later (LGPLv3+)

|

||||

Classifier: Operating System :: OS Independent

|

||||

Classifier: Programming Language :: Python

|

||||

Classifier: Programming Language :: Python :: 3 :: Only

|

||||

Classifier: Programming Language :: Python :: 3.6

|

||||

Classifier: Programming Language :: Python :: 3.7

|

||||

Classifier: Programming Language :: Python :: 3.8

|

||||

Classifier: Programming Language :: Python :: 3.9

|

||||

Classifier: Programming Language :: Python :: 3.10

|

||||

Classifier: Programming Language :: Python :: 3.11

|

||||

Classifier: Programming Language :: Python :: Implementation :: CPython

|

||||

Classifier: Programming Language :: Python :: Implementation :: PyPy

|

||||

Classifier: Topic :: Internet :: WWW/HTTP

|

||||

Classifier: Topic :: Software Development :: Libraries :: Python Modules

|

||||

Classifier: Typing :: Typed

|

||||

Requires-Dist: apeye-core>=1.0.0b2

|

||||

Requires-Dist: domdf-python-tools>=2.6.0

|

||||

Requires-Dist: platformdirs>=2.3.0

|

||||

Requires-Dist: requests>=2.24.0

|

||||

Requires-Dist: cachecontrol[filecache]>=0.12.6 ; extra == "all"

|

||||

Requires-Dist: lockfile>=0.12.2 ; extra == "all"

|

||||

Requires-Dist: cachecontrol[filecache]>=0.12.6 ; extra == "limiter"

|

||||

Requires-Dist: lockfile>=0.12.2 ; extra == "limiter"

|

||||

Project-URL: Documentation, https://apeye.readthedocs.io/en/latest

|

||||

Project-URL: Homepage, https://github.com/domdfcoding/apeye

|

||||

Project-URL: Issue Tracker, https://github.com/domdfcoding/apeye/issues

|

||||

Project-URL: Source Code, https://github.com/domdfcoding/apeye

|

||||

Provides-Extra: all

|

||||

Provides-Extra: limiter

|

||||

|

||||

======

|

||||

apeye

|

||||

======

|

||||

|

||||

.. start short_desc

|

||||

|

||||

**Handy tools for working with URLs and APIs.**

|

||||

|

||||

.. end short_desc

|

||||

|

||||

|

||||

.. start shields

|

||||

|

||||

.. list-table::

|

||||

:stub-columns: 1

|

||||

:widths: 10 90

|

||||

|

||||

* - Docs

|

||||

- |docs| |docs_check|

|

||||

* - Tests

|

||||

- |actions_linux| |actions_windows| |actions_macos| |coveralls|

|

||||

* - PyPI

|

||||

- |pypi-version| |supported-versions| |supported-implementations| |wheel|

|

||||

* - Anaconda

|

||||

- |conda-version| |conda-platform|

|

||||

* - Activity

|

||||

- |commits-latest| |commits-since| |maintained| |pypi-downloads|

|

||||

* - QA

|

||||

- |codefactor| |actions_flake8| |actions_mypy|

|

||||

* - Other

|

||||

- |license| |language| |requires|

|

||||

|

||||

.. |docs| image:: https://img.shields.io/readthedocs/apeye/latest?logo=read-the-docs

|

||||

:target: https://apeye.readthedocs.io/en/latest

|

||||

:alt: Documentation Build Status

|

||||

|

||||

.. |docs_check| image:: https://github.com/domdfcoding/apeye/workflows/Docs%20Check/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye/actions?query=workflow%3A%22Docs+Check%22

|

||||

:alt: Docs Check Status

|

||||

|

||||

.. |actions_linux| image:: https://github.com/domdfcoding/apeye/workflows/Linux/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye/actions?query=workflow%3A%22Linux%22

|

||||

:alt: Linux Test Status

|

||||

|

||||

.. |actions_windows| image:: https://github.com/domdfcoding/apeye/workflows/Windows/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye/actions?query=workflow%3A%22Windows%22

|

||||

:alt: Windows Test Status

|

||||

|

||||

.. |actions_macos| image:: https://github.com/domdfcoding/apeye/workflows/macOS/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye/actions?query=workflow%3A%22macOS%22

|

||||

:alt: macOS Test Status

|

||||

|

||||

.. |actions_flake8| image:: https://github.com/domdfcoding/apeye/workflows/Flake8/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye/actions?query=workflow%3A%22Flake8%22

|

||||

:alt: Flake8 Status

|

||||

|

||||

.. |actions_mypy| image:: https://github.com/domdfcoding/apeye/workflows/mypy/badge.svg

|

||||

:target: https://github.com/domdfcoding/apeye/actions?query=workflow%3A%22mypy%22

|

||||

:alt: mypy status

|

||||

|

||||

.. |requires| image:: https://dependency-dash.repo-helper.uk/github/domdfcoding/apeye/badge.svg

|

||||

:target: https://dependency-dash.repo-helper.uk/github/domdfcoding/apeye/

|

||||

:alt: Requirements Status

|

||||

|

||||

.. |coveralls| image:: https://img.shields.io/coveralls/github/domdfcoding/apeye/master?logo=coveralls

|

||||

:target: https://coveralls.io/github/domdfcoding/apeye?branch=master

|

||||

:alt: Coverage

|

||||

|

||||

.. |codefactor| image:: https://img.shields.io/codefactor/grade/github/domdfcoding/apeye?logo=codefactor

|

||||

:target: https://www.codefactor.io/repository/github/domdfcoding/apeye

|

||||

:alt: CodeFactor Grade

|

||||

|

||||

.. |pypi-version| image:: https://img.shields.io/pypi/v/apeye

|

||||

:target: https://pypi.org/project/apeye/

|

||||

:alt: PyPI - Package Version

|

||||

|

||||

.. |supported-versions| image:: https://img.shields.io/pypi/pyversions/apeye?logo=python&logoColor=white

|

||||

:target: https://pypi.org/project/apeye/

|

||||

:alt: PyPI - Supported Python Versions

|

||||

|

||||

.. |supported-implementations| image:: https://img.shields.io/pypi/implementation/apeye

|

||||

:target: https://pypi.org/project/apeye/

|

||||

:alt: PyPI - Supported Implementations

|

||||

|

||||

.. |wheel| image:: https://img.shields.io/pypi/wheel/apeye

|

||||

:target: https://pypi.org/project/apeye/

|

||||

:alt: PyPI - Wheel

|

||||

|

||||

.. |conda-version| image:: https://img.shields.io/conda/v/domdfcoding/apeye?logo=anaconda

|

||||

:target: https://anaconda.org/domdfcoding/apeye

|

||||

:alt: Conda - Package Version

|

||||

|

||||

.. |conda-platform| image:: https://img.shields.io/conda/pn/domdfcoding/apeye?label=conda%7Cplatform

|

||||

:target: https://anaconda.org/domdfcoding/apeye

|

||||

:alt: Conda - Platform

|

||||

|

||||

.. |license| image:: https://img.shields.io/github/license/domdfcoding/apeye

|

||||

:target: https://github.com/domdfcoding/apeye/blob/master/LICENSE

|

||||

:alt: License

|

||||

|

||||

.. |language| image:: https://img.shields.io/github/languages/top/domdfcoding/apeye

|

||||

:alt: GitHub top language

|

||||

|

||||

.. |commits-since| image:: https://img.shields.io/github/commits-since/domdfcoding/apeye/v1.4.1

|

||||

:target: https://github.com/domdfcoding/apeye/pulse

|

||||

:alt: GitHub commits since tagged version

|

||||

|

||||

.. |commits-latest| image:: https://img.shields.io/github/last-commit/domdfcoding/apeye

|

||||

:target: https://github.com/domdfcoding/apeye/commit/master

|

||||

:alt: GitHub last commit

|

||||

|

||||

.. |maintained| image:: https://img.shields.io/maintenance/yes/2023

|

||||

:alt: Maintenance

|

||||

|

||||

.. |pypi-downloads| image:: https://img.shields.io/pypi/dm/apeye

|

||||

:target: https://pypi.org/project/apeye/

|

||||

:alt: PyPI - Downloads

|

||||

|

||||

.. end shields

|

||||

|

||||

``apeye`` provides:

|

||||

|

||||

* ``pathlib.Path``\-like objects to represent URLs

|

||||

* a JSON-backed cache decorator for functions

|

||||

* a CacheControl_ adapter to limit the rate of requests

|

||||

|

||||

See `the documentation`_ for more details.

|

||||

|

||||

.. _CacheControl: https://github.com/ionrock/cachecontrol

|

||||

.. _the documentation: https://apeye.readthedocs.io/en/latest/api/cache.html

|

||||

|

||||

Installation

|

||||

--------------

|

||||

|

||||

.. start installation

|

||||

|

||||

``apeye`` can be installed from PyPI or Anaconda.

|

||||

|

||||

To install with ``pip``:

|

||||

|

||||

.. code-block:: bash

|

||||

|

||||

$ python -m pip install apeye

|

||||

|

||||

To install with ``conda``:

|

||||

|

||||

* First add the required channels

|

||||

|

||||

.. code-block:: bash

|

||||

|

||||

$ conda config --add channels https://conda.anaconda.org/conda-forge

|

||||

$ conda config --add channels https://conda.anaconda.org/domdfcoding

|

||||

|

||||

* Then install

|

||||

|

||||

.. code-block:: bash

|

||||

|

||||

$ conda install apeye

|

||||

|

||||

.. end installation

|

||||

|

||||

|

||||

.. attention::

|

||||

|

||||

In v0.9.0 and above the ``rate_limiter`` module requires the ``limiter`` extra to be installed:

|

||||

|

||||

.. code-block:: bash

|

||||

|

||||

$ python -m pip install apeye[limiter]

|

||||

|

||||
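The METADATA headers above (`Requires-Dist`, `Provides-Extra`, `Requires-Python`) use RFC 822-style syntax, so the standard library's email parser can read them. The sketch below separates unconditional requirements from those gated behind an extra. The function name `split_requirements` is hypothetical, the input is an abridged copy of the headers above, and the marker handling covers only the simple `extra == "name"` form seen here rather than full environment-marker syntax.

```python
from email.parser import Parser

# Abridged copy of the apeye METADATA headers shown above.
METADATA = """\
Metadata-Version: 2.1
Name: apeye
Version: 1.4.1
Requires-Python: >=3.6.1
Requires-Dist: apeye-core>=1.0.0b2
Requires-Dist: requests>=2.24.0
Requires-Dist: cachecontrol[filecache]>=0.12.6 ; extra == "limiter"
Requires-Dist: lockfile>=0.12.2 ; extra == "limiter"
Provides-Extra: all
Provides-Extra: limiter

======
apeye
======
"""


def split_requirements(metadata_text):
    """Return (unconditional, by_extra) requirement lists from core metadata."""
    msg = Parser().parsestr(metadata_text)
    unconditional, by_extra = [], {}
    for req in msg.get_all("Requires-Dist", []):
        # "name>=1.0 ; extra == 'x'" -> requirement spec and optional marker.
        spec, _, marker = (part.strip() for part in req.partition(";"))
        if marker.startswith("extra"):
            extra = marker.split("==", 1)[1].strip().strip("\"'")
            by_extra.setdefault(extra, []).append(spec)
        else:
            unconditional.append(spec)
    return unconditional, by_extra


unconditional, by_extra = split_requirements(METADATA)
print(unconditional)
print(by_extra)
```

This mirrors why `python -m pip install apeye[limiter]` pulls in `cachecontrol` and `lockfile` while a plain install does not.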

|

|

@ -1,20 +0,0 @@

|

|||

<!DOCTYPE html>

|

||||

<html lang="en">

|

||||

<head>

|

||||

<meta name="generator" content="simple503 version 0.4.0" />

|

||||

<meta name="pypi:repository-version" content="1.0" />

|

||||

<meta charset="UTF-8" />

|

||||

<title>

|

||||

Links for apeye

|

||||

</title>

|

||||

</head>

|

||||

<body>

|

||||

<h1>

|

||||

Links for apeye

|

||||

</h1>

|

||||

<a href="/apeye/apeye-1.4.1-py3-none-any.whl#sha256=44e58a9104ec189bf42e76b3a7fe91e2b2879d96d48e9a77e5e32ff699c9204e" data-requires-python=">=3.6.1" data-dist-info-metadata="sha256=c76bd745f0ea8d7105ed23a0827bf960cd651e8e071dbdeb62946a390ddf86c1">

|

||||

apeye-1.4.1-py3-none-any.whl

|

||||

</a>

|

||||

<br />

|

||||

</body>

|

||||

</html>

|

||||

Binary file not shown.

|

|

@ -1,6 +1,6 @@

|

|||

 Metadata-Version: 2.1
 Name: boto3
-Version: 1.41.5
+Version: 1.41.4
 Summary: The AWS SDK for Python
 Home-page: https://github.com/boto/boto3
 Author: Amazon Web Services

||||

|

|

@ -22,7 +22,7 @@ Classifier: Programming Language :: Python :: 3.14

|

|||

 Requires-Python: >= 3.9
 License-File: LICENSE
 License-File: NOTICE
-Requires-Dist: botocore (<1.42.0,>=1.41.5)
+Requires-Dist: botocore (<1.42.0,>=1.41.4)
 Requires-Dist: jmespath (<2.0.0,>=0.7.1)
 Requires-Dist: s3transfer (<0.16.0,>=0.15.0)
 Provides-Extra: crt

|

||||
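The only dependency change in this hunk is the lower bound of the `botocore` range. As a rough illustration of what such a range means, the sketch below checks a version against a comma-separated specifier using plain numeric tuples. It is a simplification that assumes purely numeric release segments and is not a PEP 440 implementation; real tooling would normally use the third-party `packaging` library. The names `parse_version` and `satisfies` are hypothetical.

```python
import operator
import re

_OPS = {
    "<": operator.lt, "<=": operator.le,
    ">": operator.gt, ">=": operator.ge,
    "==": operator.eq, "!=": operator.ne,
}


def parse_version(text):
    """Turn '1.41.5' into (1, 41, 5); numeric segments only."""
    return tuple(int(part) for part in text.split("."))


def _pad(a, b):
    """Zero-pad the shorter tuple so that 1.42 compares equal to 1.42.0."""
    n = max(len(a), len(b))
    return a + (0,) * (n - len(a)), b + (0,) * (n - len(b))


def satisfies(version, specifier):
    """Check a version against a specifier such as '(<1.42.0,>=1.41.4)'."""
    for clause in specifier.strip("() ").split(","):
        match = re.fullmatch(r"\s*(<=|>=|==|!=|<|>)\s*([0-9.]+)\s*", clause)
        if match is None:
            raise ValueError(f"unsupported clause: {clause!r}")
        op, bound = match.groups()
        left, right = _pad(parse_version(version), parse_version(bound))
        if not _OPS[op](left, right):
            return False
    return True


# botocore 1.41.4 fits the newer range but not the older, stricter one.
print(satisfies("1.41.4", "(<1.42.0,>=1.41.4)"))  # True
print(satisfies("1.41.4", "(<1.42.0,>=1.41.5)"))  # False
```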

|

|

@ -12,8 +12,8 @@

|

|||

 <h1>
 Links for boto3
 </h1>
-<a href="/boto3/boto3-1.41.5-py3-none-any.whl#sha256=bb278111bfb4c33dca8342bda49c9db7685e43debbfa00cc2a5eb854dd54b745" data-requires-python=">= 3.9" data-dist-info-metadata="sha256=329053bf9a9139cc670ba9b8557fe3e7400b57d3137514c9baf0c3209ac04d1f">
-boto3-1.41.5-py3-none-any.whl
+<a href="/boto3/boto3-1.41.4-py3-none-any.whl#sha256=77d84b7ce890a9b0c6a8993f8de106d8cf8138f332a4685e6de453965e60cb24" data-requires-python=">= 3.9" data-dist-info-metadata="sha256=c263458fb50f5617ae8ff675602ce4b3eedbbaa5c7b7ccf58a72adc50ea35e56">
+boto3-1.41.4-py3-none-any.whl
 </a>
 <br />
 </body>

|

||||

|

|

|

|||

Binary file not shown.

|

|

@ -1,6 +1,6 @@

|

|||

 Metadata-Version: 2.1
 Name: botocore
-Version: 1.41.5
+Version: 1.41.4
 Summary: Low-level, data-driven core of boto 3.
 Home-page: https://github.com/boto/botocore
 Author: Amazon Web Services

||||

|

|

@ -12,8 +12,8 @@

|

|||

 <h1>
 Links for botocore
 </h1>
-<a href="/botocore/botocore-1.41.5-py3-none-any.whl#sha256=3fef7fcda30c82c27202d232cfdbd6782cb27f20f8e7e21b20606483e66ee73a" data-requires-python=">= 3.9" data-dist-info-metadata="sha256=867c86c9f400df83088bb210e49402344febc90aa6b10d46a0cd02642ae1096c">
-botocore-1.41.5-py3-none-any.whl
+<a href="/botocore/botocore-1.41.4-py3-none-any.whl#sha256=7143ef845f1d1400dbbf05d999f8c5e8cfaecd6bd84cbfbe5fa0a40e3a9f6353" data-requires-python=">= 3.9" data-dist-info-metadata="sha256=c54e339761f3067ceebafb82873edf4713098abb5ad00b802f347104b1200a04">
+botocore-1.41.4-py3-none-any.whl
 </a>
 <br />
 <a href="/botocore/botocore-1.40.70-py3-none-any.whl#sha256=4a394ad25f5d9f1ef0bed610365744523eeb5c22de6862ab25d8c93f9f6d295c" data-requires-python=">= 3.9" data-dist-info-metadata="sha256=ff124fb918cb0210e04c2c4396cb3ad31bbe26884306bf4d35b9535ece1feb27">

|

||||

|

|

|

|||

Binary file not shown.

|

|

@ -1,78 +0,0 @@

|

|||

Metadata-Version: 2.4

|

||||

Name: certifi

|

||||

Version: 2025.11.12

|

||||

Summary: Python package for providing Mozilla's CA Bundle.

|

||||

Home-page: https://github.com/certifi/python-certifi

|

||||

Author: Kenneth Reitz

|

||||

Author-email: me@kennethreitz.com

|

||||

License: MPL-2.0

|

||||

Project-URL: Source, https://github.com/certifi/python-certifi

|

||||

Classifier: Development Status :: 5 - Production/Stable

|

||||

Classifier: Intended Audience :: Developers

|

||||

Classifier: License :: OSI Approved :: Mozilla Public License 2.0 (MPL 2.0)

|

||||

Classifier: Natural Language :: English

|

||||

Classifier: Programming Language :: Python

|

||||

Classifier: Programming Language :: Python :: 3

|

||||

Classifier: Programming Language :: Python :: 3 :: Only

|

||||

Classifier: Programming Language :: Python :: 3.7

|

||||

Classifier: Programming Language :: Python :: 3.8

|

||||

Classifier: Programming Language :: Python :: 3.9

|

||||

Classifier: Programming Language :: Python :: 3.10

|

||||

Classifier: Programming Language :: Python :: 3.11

|

||||

Classifier: Programming Language :: Python :: 3.12

|

||||

Classifier: Programming Language :: Python :: 3.13

|

||||

Classifier: Programming Language :: Python :: 3.14

|

||||

Requires-Python: >=3.7

|

||||

License-File: LICENSE

|

||||

Dynamic: author

|

||||

Dynamic: author-email

|

||||

Dynamic: classifier

|

||||

Dynamic: description

|

||||

Dynamic: home-page

|

||||

Dynamic: license

|

||||

Dynamic: license-file

|

||||

Dynamic: project-url

|

||||

Dynamic: requires-python

|

||||

Dynamic: summary

|

||||

|

||||

Certifi: Python SSL Certificates

|

||||

================================

|

||||

|

||||

Certifi provides Mozilla's carefully curated collection of Root Certificates for

|

||||

validating the trustworthiness of SSL certificates while verifying the identity

|

||||

of TLS hosts. It has been extracted from the `Requests`_ project.

|

||||

|

||||

Installation

|

||||

------------

|

||||

|

||||

``certifi`` is available on PyPI. Simply install it with ``pip``::

|

||||

|

||||

$ pip install certifi

|

||||

|

||||

Usage

|

||||

-----

|

||||

|

||||

To reference the installed certificate authority (CA) bundle, you can use the

|

||||

built-in function::

|

||||

|

||||

>>> import certifi

|

||||

|

||||

>>> certifi.where()

|

||||

'/usr/local/lib/python3.7/site-packages/certifi/cacert.pem'

|

||||

|

||||

Or from the command line::

|

||||

|

||||

$ python -m certifi

|

||||

/usr/local/lib/python3.7/site-packages/certifi/cacert.pem

|

||||

|

||||

Enjoy!

|

||||

|

||||

.. _`Requests`: https://requests.readthedocs.io/en/master/

|

||||

|

||||

Addition/Removal of Certificates

|

||||

--------------------------------

|

||||

|

||||

Certifi does not support any addition/removal or other modification of the

|

||||

CA trust store content. This project is intended to provide a reliable and

|

||||

highly portable root of trust to python deployments. Look to upstream projects

|

||||

for methods to use alternate trust.

|

||||
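The certifi README above shows `certifi.where()` returning the path to the CA bundle. One common use of that path, sketched here under the assumption that certifi may or may not be installed, is to pass it to the standard library's `ssl.create_default_context`. The function name `make_tls_context` is hypothetical, and the fallback to the system trust store is a choice made for this sketch rather than something certifi prescribes.

```python
import ssl


def make_tls_context():
    """Build a client TLS context, preferring certifi's CA bundle if available."""
    try:
        import certifi  # third-party package; treated as optional in this sketch
    except ImportError:
        # Fall back to whatever trust store the platform provides.
        return ssl.create_default_context()
    # cafile points at the cacert.pem path reported by certifi.where().
    return ssl.create_default_context(cafile=certifi.where())


context = make_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

The resulting context can be handed to `urllib.request.urlopen(url, context=context)` or to `http.client.HTTPSConnection`.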

|

|

@ -1,20 +0,0 @@

|

|||

<!DOCTYPE html>

|

||||

<html lang="en">

|

||||

<head>

|

||||

<meta name="generator" content="simple503 version 0.4.0" />

|

||||

<meta name="pypi:repository-version" content="1.0" />

|

||||

<meta charset="UTF-8" />

|

||||

<title>

|

||||

Links for certifi

|

||||

</title>

|

||||

</head>

|

||||

<body>

|

||||

<h1>

|

||||

Links for certifi

|

||||

</h1>

|

||||

<a href="/certifi/certifi-2025.11.12-py3-none-any.whl#sha256=97de8790030bbd5c2d96b7ec782fc2f7820ef8dba6db909ccf95449f2d062d4b" data-requires-python=">=3.7" data-dist-info-metadata="sha256=fc9a6b1aeff595649d1e5aee44129ca2b5e7adfbc10e1bd7ffa291afc1d06cb7">

|

||||

certifi-2025.11.12-py3-none-any.whl

|